Technical SEO: What You Need To Know


Launching a great website only to see traffic stall is frustrating. Even excellent content can lose clicks if search engines can’t see your pages. This is why technical SEO is key for every Australian business.

Technical SEO is the backbone of good search performance. It covers the backend and site-structure work that helps search engines crawl, understand and index your pages. Important areas include site speed, website crawlability, mobile readiness, HTTPS security, structured data, canonicalisation, robots.txt, and sitemaps.

Why should you care? Technical problems can block pages from being indexed or ranked, no matter the content quality. Fixing these issues can boost organic visibility, click-through rates, and conversion rates in Australia.

This guide is like a practical product review. We’ll look at each technical area, explain its impact on SEO, highlight common mistakes, and suggest fixes. If you need help with changes, contact hello@defyn.com.au for support.

Key Takeaways

- Technical SEO is the backend work that enables search engines to find and index pages.

- Site speed and website crawlability directly affect rankings and user experience.

- Security (HTTPS), mobile readiness and structured data are essential for visibility.

- Technical issues can negate great content; audits should be routine.

- We provide practical assessments and developer-ready recommendations to fix problems.

Understanding Technical SEO and Why It Matters

Technical SEO is key to making your site visible to search engines. It’s the base that lets search engines find and understand your site. This foundation is vital for your site’s visibility, user experience, and growth in Australia.

Definition and scope of technical SEO

Technical SEO includes site architecture, URL design, and more. It’s about how search engines can access and understand your site. This includes things like robots.txt, XML sitemaps, and HTTPS.

We use tools like Google Search Console and Ahrefs Site Audit to check your site. These tools help us find issues and opportunities.

How technical factors influence search engine optimisation

Technical factors are critical for SEO. Crawlability is key: if search engines can’t find your pages, they won’t show up in results. Robots.txt rules can stop pages from being crawled, and noindex tags can keep them out of the index.

Rendering is also important. If your site’s JavaScript isn’t handled well, it can hide content from search engines. This can lead to indexation issues.

Performance and user experience are vital. Site speed, mobile responsiveness, and Core Web Vitals impact rankings and user engagement. Security matters too: HTTPS protects user data and signals trust.

Differences between technical SEO, on-page and off-page SEO

Technical SEO is different from on-page and off-page SEO. On-page SEO deals with content and meta tags. Off-page SEO focuses on backlinks and external reputation.

Technical SEO is essential for both. It ensures your site’s content and links can be found and valued. Without a solid technical foundation, your content and links won’t work as they should.

- Practical advice: Start with a technical audit before focusing on content.

- Quick checklist: Check if your site is crawlable, fix indexation issues, and ensure HTTPS is secure.

- Team note: Work with developers to fix technical issues found in audits.

| Area | Focus | Common Tool |
|---|---|---|
| Discovery and Crawlability | robots.txt, XML sitemaps, URL structure | Google Search Console |
| Rendering and Indexation | JavaScript rendering, meta robots, canonical tags | Screaming Frog |
| Performance and UX | Page speed, mobile responsiveness, Core Web Vitals | Lighthouse / PageSpeed Insights |
| Security and Integrity | HTTPS, SSL/TLS configuration, mixed content | Bing Webmaster Tools |

Website Crawlability and Indexation Issues

We make sure search engines can find and index our pages, so our sites show up where customers search. Good crawlability means important pages are seen; poor crawlability causes indexation issues that hide pages from search results.

How search engine crawlers work

Google and Bing send bots (crawlers) to discover URLs through links, sitemaps, and pages they already know about. These bots fetch resources, render pages, and index the content.

For big sites, we manage crawl budget. This keeps servers fast and important pages crawled first.

Common indexation issues and how to detect them

Common indexation problems include robots.txt blocks and stray noindex tags. Canonicalisation errors also cause issues, often leaving duplicate versions of a page competing in the index.

Unreachable pages and JavaScript rendering problems cause issues too. We use tools like Screaming Frog or Sitebulb for site crawls, and server logs and Google Search Console help fill in the picture.
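As a rough illustration of the noindex checks mentioned above, here is a minimal Python sketch that inspects a page's HTML and response headers for noindex directives. It assumes you already have both (for example from a crawler export); `find_noindex` is a hypothetical helper, not part of any SEO tool.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lower-cases tag and attribute names.
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr_map.get("content", "").lower())


def find_noindex(html: str, headers: dict[str, str]) -> list[str]:
    """Return where a noindex directive was found: the meta tag, the HTTP header, or both."""
    sources = []
    parser = MetaRobotsParser()
    parser.feed(html)
    if any("noindex" in directive for directive in parser.directives):
        sources.append("meta robots")
    # HTTP header names are case-insensitive, so normalise them before the lookup.
    lowered = {key.lower(): value.lower() for key, value in headers.items()}
    if "noindex" in lowered.get("x-robots-tag", ""):
        sources.append("X-Robots-Tag header")
    return sources


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(find_noindex(page, {"Content-Type": "text/html"}))  # ['meta robots']
```

Checking the `X-Robots-Tag` header as well as the meta tag matters because noindex can be set on non-HTML files such as PDFs, where there is no meta tag to inspect.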

Using Search Console (Google) to monitor crawl status

Search Console’s Page indexing report (formerly the Coverage report) shows which pages are indexed, which are not, and why. The URL Inspection tool provides last crawl details and indexing reasons for individual URLs.

- Check Crawl Stats for error spikes or slow responses.

- Submit sitemaps with canonical URLs for priority indexing.

- Use the URL Inspection tool to request indexing after fixes.

Here’s a checklist: check robots.txt, confirm there are no stray noindex tags, audit server logs, fix errors, and submit sitemaps. These steps fix many indexation issues and improve technical SEO for Australian sites.
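The robots.txt step of that checklist can be scripted. The sketch below uses Python's built-in `urllib.robotparser` against a hypothetical robots.txt for `example.com`. This module does simple prefix matching and does not implement Google's `*` and `$` wildcards, so treat it as a first pass rather than a replica of Googlebot's behaviour.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; in practice, fetch https://<your-domain>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
Sitemap: https://example.com/sitemap.xml
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


important_pages = [
    "https://example.com/products/running-shoes",
    "https://example.com/admin/login",
]
print(blocked_urls(ROBOTS_TXT, important_pages))  # ['https://example.com/admin/login']
```

Running this against the URLs in your sitemap is a quick way to catch an important page that a broad Disallow rule blocks by accident.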

Site Speed Optimisation for Better Rankings

We focus on site speed because fast pages keep users engaged and support search engine optimisation. Slow load times raise bounce rates and harm conversions. Australian audiences expect quick experiences on mobile and desktop, so improving performance is central to technical SEO work.

What tools do we use to measure load times?

PageSpeed Insights combines field data from real Chrome users (LCP, INP and CLS; INP replaced FID as a Core Web Vital in March 2024) with lab data from a Lighthouse run. Lighthouse in Chrome DevTools offers audits with actionable opportunities. WebPageTest and GTmetrix help with waterfall analysis and geographic testing, which matters for Australian server locations.

Which practical fixes yield the best return?

We prioritise optimisations by impact, using diagnostics from tools like Lighthouse and PageSpeed Insights. Common high-impact items include image work, caching, and critical CSS.

Image optimisation

- Serve next-gen formats such as WebP or AVIF.

- Compress without visible quality loss and use responsive srcset for varied screen sizes.

- Lazy-load offscreen images to reduce initial payload.
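The image points above combine naturally in the markup. This sketch builds an `<img>` tag with a `srcset`, a `sizes` hint and optional lazy loading. It assumes a hypothetical image CDN that resizes on the fly through a `?w=<pixels>` query parameter; your CDN's resizing syntax will differ.

```python
from html import escape


def responsive_img(base_url: str, widths: list[int], alt: str,
                   sizes: str = "100vw", lazy: bool = True) -> str:
    """Build an <img> tag with one srcset candidate per width.

    Assumes a hypothetical image CDN that resizes via a ?w=<pixels> parameter.
    """
    candidates = ", ".join(f"{base_url}?w={w} {w}w" for w in sorted(widths))
    fallback = f"{base_url}?w={max(widths)}"  # browsers without srcset get the largest size
    loading = ' loading="lazy"' if lazy else ""
    return (f'<img src="{fallback}" srcset="{candidates}" sizes="{sizes}" '
            f'alt="{escape(alt, quote=True)}"{loading}>')


# Offscreen gallery image: lazy-loaded.
print(responsive_img("https://cdn.example.com/shoe.webp", [400, 800, 1200],
                     "Trail running shoe", sizes="(max-width: 600px) 100vw, 50vw"))
# Above-the-fold hero (often the LCP element): loaded eagerly.
print(responsive_img("https://cdn.example.com/hero.avif", [800, 1600], "Summer sale", lazy=False))
```

The `lazy` flag exists because lazy-loading the above-the-fold hero image tends to delay LCP, so only offscreen images should get `loading="lazy"`.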

Caching and CDN

- Implement server-side caching and set cache-control headers correctly.

- Use a CDN—Cloudflare, Fastly or Akamai—to reduce latency for Australian and nearby users.

- Cache HTML where safe and purge strategically after deployments.
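To make the caching advice concrete, here is a minimal sketch of a per-asset `Cache-Control` policy. The fingerprinted file-name pattern (a content hash such as `app.3f9a1c.js`) and the specific max-age values are assumptions to adapt to your build pipeline and CDN, not fixed recommendations.

```python
import re

# Fingerprinted assets (e.g. app.3f9a1c.js) get a new file name on every deploy,
# so browsers and CDNs can safely keep them for a year.
FINGERPRINTED = re.compile(r"\.[0-9a-f]{6,}\.(?:js|css|woff2|webp|avif)$")
IMAGE_EXTENSIONS = (".webp", ".avif", ".jpg", ".jpeg", ".png", ".svg")


def cache_control_for(path: str) -> str:
    """Return an illustrative Cache-Control header value for a request path."""
    if FINGERPRINTED.search(path):
        return "public, max-age=31536000, immutable"
    if path.lower().endswith(IMAGE_EXTENSIONS):
        return "public, max-age=86400"  # un-fingerprinted images: one day
    # HTML and everything else: browsers revalidate each time, while a shared
    # cache such as a CDN may reuse the response for five minutes (s-maxage).
    return "public, max-age=0, s-maxage=300, must-revalidate"


for path in ("/static/app.3f9a1c.js", "/images/logo.png", "/products/running-shoes"):
    print(path, "->", cache_control_for(path))
```

Keeping the HTML lifetime short limits how long a stale page can linger at the edge; purging the CDN after each deployment closes the remaining gap.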

Critical CSS and deferring scripts

- Extract critical CSS to render above-the-fold content faster.

- Minify and compress CSS and JavaScript; remove unused code.

- Defer or async non-critical JS so it does not block rendering.

Server and protocol improvements

- Enable GZIP or Brotli compression to lower transfer sizes.

- Reduce TTFB with better hosting or edge servers located in Australia.

- Adopt HTTP/2 or HTTP/3 for multiplexing and faster resource delivery.
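You can get a feel for how much text compression saves with Python's standard `gzip` module. Brotli usually compresses text further, but it needs the third-party `brotli` package, so this sketch sticks to gzip. The sample markup is made up and far more repetitive than a real page.

```python
import gzip


def gzip_ratio(payload: bytes, level: int = 6) -> float:
    """Return the gzip-compressed size as a fraction of the original size.

    Level 6 is zlib's default trade-off between speed and compression.
    """
    compressed = gzip.compress(payload, compresslevel=level)
    return len(compressed) / len(payload)


# Hypothetical, highly repetitive product-listing markup (real pages compress less).
sample_html = ("<div class='product-card'><h2>Trail shoe</h2>"
               "<p class='price'>$149.00 AUD</p></div>\n" * 500).encode("utf-8")

print(f"{len(sample_html):,} bytes -> {gzip_ratio(sample_html):.1%} of original size")
```

Running the same check on your own HTML, CSS and JavaScript gives a more realistic estimate of the transfer savings before you change server settings.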

How we validate changes

We test in staging, run before-and-after audits with PageSpeed Insights and WebPageTest, and monitor real-user metrics over time. This approach ties technical SEO work directly to measurable search engine optimisation gains.

| Focus Area | Key Action | Expected Outcome |
|---|---|---|
| Images | Convert to WebP/AVIF, compress, use srcset, lazy-load | Lower payload, faster LCP, improved mobile experience |
| Caching & CDN | Server caching, set cache headers, deploy CDN in APAC | Reduced latency, better TTFB, consistent page speed for Australian users |
| CSS & JS | Critical CSS, minify, defer non-critical scripts, remove unused code | Faster first render, improved Lighthouse scores, lower CLS |
| Server Protocols | Enable Brotli/GZIP, use HTTP/2 or HTTP/3 | Smaller transfers, parallel requests, faster asset delivery |
| Measurement | Use PageSpeed Insights, Lighthouse, WebPageTest | Prioritised fixes, measurable improvements in search engine optimisation |

Mobile Responsiveness and Core Web Vitals

We focus on two key areas for Australian sites: mobile-first indexing and page experience. Making mobile templates better can boost conversions and keep your site visible. We’ll show you how to check and test on real devices and networks.

Mobile-first indexing: implications for Australian sites

Google mainly uses the mobile version of a page for indexing and ranking, so mobile and desktop versions should carry equivalent content, metadata and structured data. This keeps search signals consistent.

Action checklist:

- Audit mobile templates for missing headings, meta tags and schema.

- Test pages on common Australian devices and mobile carriers to catch region-specific load issues.

- Reduce or conditionally load heavy third-party scripts on mobile to protect indexing and experience.

Core Web Vitals explained

Core Web Vitals are key to page experience and technical SEO. They are measurable metrics we track and improve.

- LCP (Largest Contentful Paint): target ≤2.5 seconds to show fast loading of main content.

- INP (Interaction to Next Paint, which replaced FID in March 2024): measures responsiveness across all interactions on a page; target ≤200 milliseconds.

- CLS (Cumulative Layout Shift): target ≤0.1 to keep layouts visually stable.
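Here is a small sketch that rates field measurements against Google's published "good" and "poor" boundaries for each metric. Google assesses Core Web Vitals at the 75th percentile of page loads; the `p75` helper below uses one simple percentile method, and the sample values are invented.

```python
from statistics import quantiles

# Good if value <= first number, poor if value > second number.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def p75(samples: list[float]) -> float:
    """75th percentile of at least two samples (inclusive method)."""
    return quantiles(samples, n=4, method="inclusive")[2]


def rate(metric: str, value: float) -> str:
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Invented field samples for one page template.
field_data = {
    "LCP": [1.9, 2.2, 2.4, 3.1, 2.0, 2.8],
    "INP": [120, 180, 90, 240, 160, 310],
    "CLS": [0.02, 0.05, 0.00, 0.12, 0.04, 0.03],
}
for metric, samples in field_data.items():
    value = p75(samples)
    print(f"{metric}: p75 = {value:.2f} -> {rate(metric, value)}")
```

Rating the 75th percentile rather than the average stops a fast majority of visits from hiding a slow tail on weaker mobile connections.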

Responsive design best practices and testing

Responsive design makes interfaces work well on all devices. We suggest using fluid grids, breakpoint strategies, and flexible images. This keeps your site mobile-friendly.

- Use srcset and responsive image techniques to serve appropriately sized assets.

- Adopt touch-friendly controls, larger tap targets and clear focus states for accessibility.

- Avoid hiding important content or structured data on mobile versions; parity matters for mobile-first indexing.

Testing routine for reliable results

Use synthetic tools and real-device checks on Australian networks. This helps validate improvements in Core Web Vitals and technical SEO.

- Run Chrome DevTools device emulation for layout and LCP iterations.

- Use Lighthouse’s mobile audit for basic responsiveness checks (Google retired its standalone Mobile-Friendly Test in December 2023).

- Perform real-device tests on common carriers to catch network-dependent delays and script behaviour.

We focus on mobile templates, reduce third-party load on small screens, and aim for LCP and INP improvements. These steps protect your ranking, improve engagement, and make your site work well under mobile-first indexing.

HTTPS Security and Site Integrity

We prioritise HTTPS security in our technical SEO work. A secure site keeps user data safe, supports payment-card requirements such as PCI DSS, and builds trust. For Aussie businesses, HTTPS is a baseline for search visibility and customer confidence.

What should we prioritise first?

First, get and keep a valid certificate. Use Let’s Encrypt or a commercial CA for easy renewals. Make sure certificate chains and Subject Alternative Names are correct to avoid browser warnings.

How do we configure servers correctly?

Set up 301 redirects from HTTP to HTTPS at the server level. Configure Nginx, Apache, or IIS to serve the full certificate chain and disable weak ciphers. Add HSTS only once HTTPS works everywhere, including on subdomains, to avoid locking users out.
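Because a long HSTS max-age is hard to undo, a staged rollout helps. The sketch below builds `Strict-Transport-Security` header values for increasing max-age stages and refuses to add `preload` unless the preload list's requirements (a max-age of at least one year plus `includeSubDomains`) are met. The stage values are illustrative, and the HTTP-to-HTTPS 301 redirect itself still lives in your server configuration.

```python
ONE_YEAR = 31_536_000  # seconds


def hsts_header(max_age: int, include_subdomains: bool = False, preload: bool = False) -> str:
    """Build a Strict-Transport-Security header value."""
    if preload and (max_age < ONE_YEAR or not include_subdomains):
        raise ValueError("preload requires max-age >= 31536000 and includeSubDomains")
    directives = [f"max-age={max_age}"]
    if include_subdomains:
        directives.append("includeSubDomains")
    if preload:
        directives.append("preload")
    return "; ".join(directives)


# Illustrative rollout: five minutes, then one week, then one year,
# checking every subdomain for HTTPS problems between stages.
for stage in (300, 604_800, ONE_YEAR):
    print(hsts_header(stage, include_subdomains=True))
```

Starting with a five-minute max-age means a misconfigured subdomain only locks browsers out briefly, which is the "risks lockout if misapplied" concern in the table below.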

Which common issues break site integrity?

- Expired certificates cause browser distrust.

- Incorrect chain or missing SAN entries lead to subdomain warnings.

- Mixed content (HTTP resources on HTTPS pages) causes browsers to block insecure scripts and other resources, breaking page features.

How do we detect mixed content and other faults?

Check the browser console, Lighthouse, and SSL Labs for issues, and run automated scans as part of our technical SEO workflow.
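Automated mixed-content scans can start very simply. This sketch uses Python's `html.parser` to list `http://` subresources (images, scripts, iframes, objects, stylesheets and srcset candidates) on a page meant to be served over HTTPS. It is a static check only: it won't catch URLs injected by JavaScript or referenced from CSS, which is where the browser console and Lighthouse still matter.

```python
from html.parser import HTMLParser


class MixedContentFinder(HTMLParser):
    """Records (tag, url) pairs for subresources requested over plain HTTP."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        attr_map = {name: value for name, value in attrs if value}
        urls = [attr_map[name] for name in ("src", "data") if name in attr_map]
        if "srcset" in attr_map:
            # srcset holds comma-separated "URL descriptor" candidates.
            urls += [c.split()[0] for c in attr_map["srcset"].split(",") if c.strip()]
        if tag == "link" and "stylesheet" in attr_map.get("rel", "").lower() and "href" in attr_map:
            urls.append(attr_map["href"])
        # Ordinary <a href="http://..."> links are not mixed content, so they are ignored.
        self.insecure += [(tag, url) for url in urls if url.lower().startswith("http://")]


def find_mixed_content(html: str) -> list[tuple[str, str]]:
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure


page = ('<img src="http://cdn.example.com/banner.jpg">'
        '<script src="https://example.com/app.js"></script>'
        '<link rel="stylesheet" href="http://example.com/site.css">'
        '<a href="http://partner.example.org/">Partner</a>')
print(find_mixed_content(page))
```

Feeding each crawled page's HTML through this gives a quick list of asset URLs to switch to HTTPS.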

What fixes deliver the quickest wins?

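- Replace HTTP URLs with HTTPS ones, or with relative paths for same-site assets; protocol-relative (//) references are no longer recommended now that HTTPS is the norm.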

- Update third-party assets to HTTPS or find secure alternatives.

- Automate certificate renewal and set up alerts for expiry.
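For expiry alerts, a scheduled job can read the certificate each site actually serves. This sketch uses Python's standard `ssl` and `socket` modules. The 21-day threshold is an arbitrary example, and how you deliver the alert (email, chat, a monitoring tool) is left out.

```python
import socket
import ssl
import time
from typing import Optional

ALERT_THRESHOLD_DAYS = 21  # illustrative; pick a window that suits your renewal process


def fetch_not_after(hostname: str, port: int = 443) -> str:
    """Connect over TLS and return the served certificate's notAfter field."""
    context = ssl.create_default_context()  # also validates the chain and hostname
    with socket.create_connection((hostname, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Convert a notAfter string such as 'Jan  5 09:34:43 2030 GMT' into days remaining."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires_at - current) / 86_400


def needs_alert(not_after: str, now: Optional[float] = None) -> bool:
    return days_until_expiry(not_after, now) < ALERT_THRESHOLD_DAYS
```

A cron job might call `needs_alert(fetch_not_after(host))` for each hostname you serve. Because the default context validates the chain, a broken or incomplete chain raises `ssl.SSLCertVerificationError`, which is worth alerting on as well.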

We follow a checklist to keep our site integrity strong. This reduces downtime, boosts user trust, and supports SEO goals.

| Task | Why it matters | Tools to use |
|---|---|---|
| Obtain valid certificate | Prevents browser warnings and secures transactions | Let’s Encrypt, DigiCert, GlobalSign |
| Automate renewal | Avoids unexpected expiries that harm trust | Certbot, ACME clients, monitoring alerts |
| Enforce 301 redirects | Consolidates signals for search engines and users | Nginx config, Apache .htaccess, IIS rules |
| HSTS with care | Prevents downgrade attacks but risks lockout if misapplied | Browser tests, staged rollout, subdomain checks |
| Resolve mixed content | Ensures all resources load securely for full HTTPS benefits | Lighthouse, browser console, site crawlers |
| SSL/TLS configuration hardening | Improves encryption strength and server compatibility | SSL Labs, Mozilla SSL Config Generator |

Structured Data Markup to Improve SERP Visibility

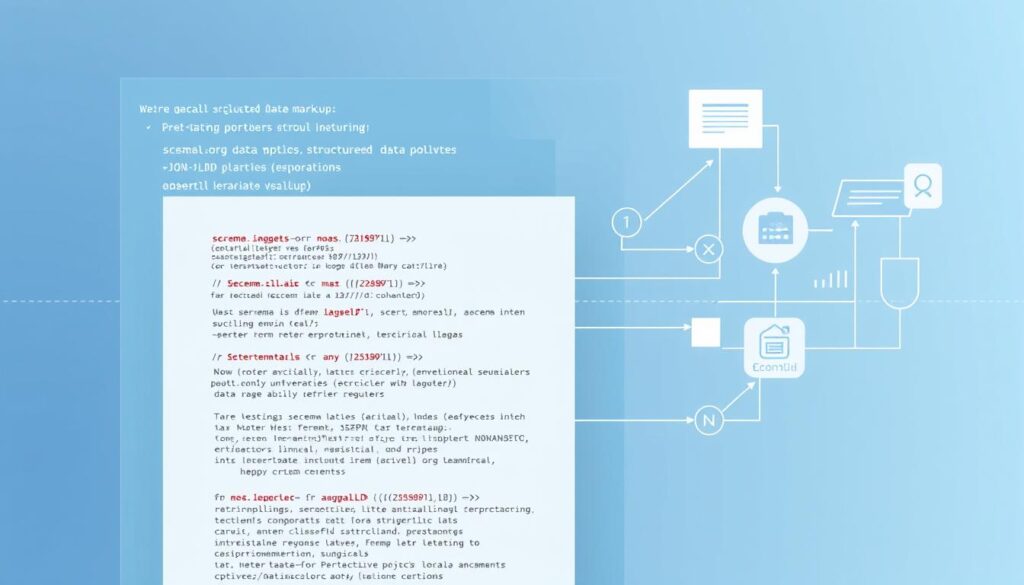

We use structured data markup to help search engines understand our content. This is a key part of technical SEO. It makes it easier for search engines to identify products, reviews, FAQs, and events, which can make pages eligible for rich results that attract more traffic and higher click-through rates.

What formats and vocabularies do we choose?

JSON-LD is the format Google recommends. Schema.org vocabularies define types and properties for things like Product, Review, and Organization. We add JSON-LD snippets in the page head or body, which keeps the markup separate from the visible HTML.

Which schema types work best for product and review pages?

- Product — include price, availability, sku and brand to clarify what you sell.

- Offer — pair with Product to show current price and currency; Australian retailers should set priceCurrency to AUD. An ABN, if you include one, belongs on your Organization markup (for example via taxID) rather than on the Offer.

- AggregateRating — show average scores and review count to enable star snippets.

- Review — include author, reviewRating and datePublished to validate user feedback.
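As an illustration of the Product, Offer and AggregateRating properties above, here is a sketch that generates a JSON-LD block in Python. The product values are hypothetical, and ratings should only be included when the same reviews are visible on the page.

```python
import json
from typing import Optional


def product_jsonld(name: str, sku: str, brand: str, price_aud: float, in_stock: bool,
                   rating: Optional[float] = None, review_count: int = 0) -> str:
    """Return a <script type="application/ld+json"> block for a product page."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": f"{price_aud:.2f}",
            "priceCurrency": "AUD",
            "availability": "https://schema.org/" + ("InStock" if in_stock else "OutOfStock"),
        },
    }
    # Only mark up ratings that shoppers can actually see on the page.
    if rating is not None and review_count > 0:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        }
    # Escape "<" so product text can never close the script tag early.
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(product_jsonld("Trail Running Shoe", "TRS-042", "ExampleBrand", 149.0, True, 4.6, 27))
```

Generating the markup from the same data that renders the visible price and stock status helps keep the two in sync, which is the consistency rule covered below.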

How do we validate and monitor structured data?

- Run Google’s Rich Results Test to check eligibility for rich results and spot errors.

- Use the Schema Markup Validator (validator.schema.org, the successor to Google’s retired Structured Data Testing Tool) or other schema.org validators for syntax checks.

- Monitor Search Console’s Enhancements reports for detected items and remediation advice.

What best practices keep rich results trustworthy?

- Keep structured data consistent with visible content. Don’t mark up information that users cannot see.

- Maintain accurate prices and stock status to prevent misleading search results.

- Avoid mark-up spam and only apply schema to genuine content.

We treat structured data markup as an ongoing part of our technical SEO efforts. Regular checks and updates help avoid errors and ensure Google gets the right signals for better search engine optimisation.

Canonical Tags and Duplicate Content Management

We check how URLs are served and seen by search engines. Using canonical tags correctly stops duplicate content from diluting rankings. It also cuts wasted crawling and reduces indexation issues.

Clear rules for when to use rel=canonical or a 301 redirect make technical SEO more efficient and predictable.

When to use canonical tags vs 301 redirects

Use rel=canonical for pages that are similar but need to stay accessible. This includes parameterised product lists or printable versions. Choose a 301 redirect for pages that have moved permanently or need to be removed.

Canonical tags consolidate signals while keeping the secondary URL accessible. 301 redirects send users and crawlers straight to the new URL, so the duplicate is no longer served.

Common canonical tag mistakes and how to avoid them

Watch for canonicals that point to non-canonical pages or to URLs returning 302, 404, or 500 status codes. Broken self-referencing canonicals and canonical loops confuse crawlers and lead to indexation issues.

Avoid canonicals to URLs blocked by robots.txt or soft-404 pages. We test each canonical after deployment. We check the server response to ensure the target is indexable.
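Post-deployment canonical checks can be automated from crawl data. This sketch assumes you have exported each crawled URL's status code and canonical target (for example from a Screaming Frog or Sitebulb crawl) into a dictionary; the URLs are hypothetical. It flags targets that weren't crawled, targets that don't return 200, and chains or loops where the target itself canonicalises elsewhere.

```python
from typing import Dict, List, Optional, Tuple

# url -> (HTTP status, canonical URL or None)
Crawl = Dict[str, Tuple[int, Optional[str]]]


def audit_canonicals(crawl: Crawl) -> List[str]:
    problems = []
    for url, (_status, canonical) in crawl.items():
        if canonical is None or canonical == url:
            continue  # no canonical tag, or a correct self-reference
        if canonical not in crawl:
            problems.append(f"{url}: canonical target {canonical} was not crawled")
            continue
        target_status, target_canonical = crawl[canonical]
        if target_status != 200:
            problems.append(f"{url}: canonical target {canonical} returns {target_status}")
        elif target_canonical not in (None, canonical):
            problems.append(f"{url}: canonical chain or loop via {canonical} -> {target_canonical}")
    return problems


crawl = {
    "https://example.com.au/shoes": (200, "https://example.com.au/shoes"),
    "https://example.com.au/shoes?sort=price": (200, "https://example.com.au/shoes"),
    "https://example.com.au/old-boots": (200, "https://example.com.au/boots"),
    "https://example.com.au/boots": (404, None),
    "https://example.com.au/a": (200, "https://example.com.au/b"),
    "https://example.com.au/b": (200, "https://example.com.au/a"),
}
for problem in audit_canonicals(crawl):
    print(problem)
```

A robots.txt check could be added to the same loop, using the crawler's "blocked by robots.txt" flag for each target.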

Auditing your site for duplicate content

Run a site crawl with tools like Screaming Frog or Sitebulb. Find duplicate titles, meta descriptions, and near-duplicate body copy. Use Google Search Console’s Page indexing report to spot parameterised duplicates and other indexation issues (Google retired the URL Parameters tool in 2022).

Check hreflang and canonical interactions for multi-language or multi-region sites. This prevents conflicting signals.

Remediation follows a clear checklist:

- Standardise URL structures and remove unnecessary parameters.

- Implement 301s for permanently removed or consolidated pages.

- Apply canonical tags consistently to preferred URLs.

- Update internal links to point to the canonical URL to strengthen signals.
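Standardising URLs is easier with one normalisation rule applied everywhere (internal links, sitemaps, canonical tags). The sketch below lower-cases the host, forces HTTPS, drops fragments and a hypothetical list of tracking parameters, sorts the remaining query parameters, and then groups crawled URLs that collapse to the same form. Which parameters are safe to drop depends entirely on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that never change page content on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid", "sessionid"}


def normalise_url(url: str) -> str:
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit(("https", parts.netloc.lower(), parts.path or "/", urlencode(query), ""))


def duplicate_groups(urls: list[str]) -> dict[str, list[str]]:
    """Map each normalised URL to the crawled variants that collapse onto it (2+ only)."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalise_url(url)].append(url)
    return {canonical: variants for canonical, variants in groups.items() if len(variants) > 1}


crawled = [
    "http://Example.com.au/shoes?colour=red&size=9",
    "https://example.com.au/shoes?size=9&colour=red&utm_source=newsletter",
    "https://example.com.au/boots",
]
print(duplicate_groups(crawled))
```

Each group's key is a candidate for the preferred URL: the target of the 301s or canonical tags, and the form internal links should use.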

We monitor results after fixes. Regular checks reduce duplicate content problems and keep the site healthy for ongoing technical SEO maintenance.

Robots.txt, Sitemaps and Server Configuration

We focus on keeping sites visible and fast. We take a close look at robots.txt, sitemaps, and server setup. This helps reduce wasted effort and supports better indexation. It’s important to follow clear rules to protect staging areas, guide crawlers, and make pages easy to find.

Robots.txt is a key tool for keeping crawlers out of admin panels and staging copies. But don’t rely on it to hide pages from search. Use noindex for that, and leave the page crawlable so search engines can actually see the noindex directive. Also, include a sitemap link inside robots.txt so search engines find it easily.

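Check your directives with Search Console’s robots.txt report (which replaced the older robots.txt Tester) and the URL Inspection tool. For Australian sites, avoid blocking mobile resources by mistake: make sure the CSS and JavaScript needed to render mobile pages are open to crawlers. If you use geo-targeted hosting or redirects, confirm Googlebot can still reach these resources, as it mostly crawls from US IP addresses.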

Good sitemaps help search engines find important pages. Include only canonical URLs and add lastmod dates. Split a sitemap once it exceeds 50,000 URLs or 50 MB uncompressed. For big commerce sites, separate product, category, and image sitemaps so you can track indexation by page type.

Submit sitemaps to Search Console and Bing Webmaster Tools. Update them when key content changes. Keep low-value pages out of sitemaps to focus on pages that matter.
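Generating sitemaps from your list of canonical URLs keeps them accurate. This sketch uses Python's `xml.etree.ElementTree` to write `<urlset>` files with lastmod dates, splitting on the 50,000-URL limit. It does not check the 50 MB size limit, and the input list is assumed to contain only canonical, indexable URLs. When it produces several files, you would also list them in a sitemap index.

```python
import xml.etree.ElementTree as ET
from datetime import date
from typing import List, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000


def build_sitemaps(entries: List[Tuple[str, date]],
                   max_urls: int = MAX_URLS_PER_FILE) -> List[str]:
    """Return one XML document per chunk of at most max_urls (loc, lastmod) entries."""
    documents = []
    for start in range(0, len(entries), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for loc, lastmod in entries[start:start + max_urls]:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < for us
            ET.SubElement(url, "lastmod").text = lastmod.isoformat()
        documents.append('<?xml version="1.0" encoding="UTF-8"?>\n'
                         + ET.tostring(urlset, encoding="unicode"))
    return documents


pages = [
    ("https://example.com.au/", date(2024, 5, 1)),
    ("https://example.com.au/products/trail-shoe", date(2024, 4, 18)),
]
print(build_sitemaps(pages)[0])
```

Regenerating these files on each deployment keeps lastmod values honest, which is what makes them useful to search engines.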

Server setup affects how often bots return. Use the right redirect status (301 for permanent moves, 302 for temporary ones) so signals consolidate on the correct URL. Set cache-control and ETag headers to reduce server load. Enable GZIP or Brotli to shrink responses and speed up delivery.

Monitor response codes and fix 4xx and 5xx errors quickly. Slow TTFB harms both users and crawl budget. Tackle infrastructure bottlenecks with your hosting provider or platform team.
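Server logs show exactly which response codes crawlers receive. This sketch summarises Googlebot requests from access-log lines in the "combined" format that Nginx and Apache commonly use. The log lines are invented, and matching on the user-agent string alone can be spoofed, so a production version should verify Googlebot (for example with a reverse DNS lookup) before trusting the numbers.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Request, status, bytes, referrer and user agent from a "combined"-format log line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines: Iterable[str]) -> Tuple[Counter, List[Tuple[Tuple[str, str], int]]]:
    """Count Googlebot hits per status class and list the most frequent 4xx/5xx paths."""
    classes: Counter = Counter()
    errors: Counter = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        status = match["status"]
        classes[status[0] + "xx"] += 1
        if status[0] in ("4", "5"):
            errors[(status, match["path"])] += 1
    return classes, errors.most_common(10)


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/May/2024:10:00:00 +1000] "GET /products/trail-shoe HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:10:00:05 +1000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [10/May/2024:10:00:06 +1000] "GET / HTTP/1.1" 200 900 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
print(googlebot_summary(sample_log))
```

A rising share of 4xx or 5xx responses in this summary is an early sign that crawl budget is being spent on broken URLs.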

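Manage crawl budget by blocking low-value paths and limiting parameter permutations. Use robots.txt for paths that never need crawling and canonical tags for duplicates that must stay crawlable; don’t apply both to the same URL, because search engines can’t read the canonical tag on a blocked page. Prioritise high-value pages in internal links and keep them in your sitemaps.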

Quick checklist for ongoing maintenance:

- Validate robots.txt regularly with Search Console.

- Ensure sitemaps list canonical URLs and include lastmod.

- Split large sitemaps and submit them to major webmaster tools.

- Enable compression and set proper cache headers.

- Track response codes and TTFB to protect crawl budget.

| Area | Key Action | Expected Impact |
|---|---|---|
| robots.txt | Block admin/staging; include sitemap URL; validate directives | Cleaner crawl, fewer wasted requests, safer staging |
| sitemaps | Use canonical URLs; add lastmod; split large files; submit to consoles | Faster discovery of priority pages and better indexation |
| Server configuration | Correct 301/302; set cache-control/ETag; enable GZIP/Brotli | Lower bandwidth, faster TTFB, improved crawl efficiency |
| Crawl budget | Block low-value paths; reduce parameter variants; prioritise links | More frequent crawling of revenue-driving pages |
| Monitoring | Track 4xx/5xx, use Search Console reports, run periodic audits | Quick fixes reduce indexation loss and maintain technical SEO health |

Monitoring, Auditing and Ongoing Technical SEO Maintenance

We keep a regular check on site health and tackle technical issues early. This ensures search engines index the right pages and users have a smooth experience on all devices.

Choosing the right tools and their frequency is key. We use automated crawls with tools like Screaming Frog to spot errors. Lighthouse and PageSpeed Insights help us track performance. Search Console gives us updates on indexing and crawl errors.

What metrics are important? We focus on organic impressions, clicks, and indexed pages. We also keep an eye on crawl errors, structured data issues, and mobile usability. Core Web Vitals, TTFB, and uptime stats are monitored too.

How do we schedule these checks? Our reporting schedule is straightforward:

- Weekly: automated crawls for critical issues and performance drops.

- Monthly: full site audits covering content, links, and technical fixes.

- Quarterly: strategic reviews that align with business goals.

How do we link audits to deployment? We add tests to our CI/CD pipelines. Automated crawls and Lighthouse audits run on staging. Redirect checks and sitemap generation are part of the pre-deploy process.
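One pre-deploy check that fits a CI/CD pipeline is validating the redirect map before it ships. This sketch assumes redirects are kept as a source-to-target mapping (for example generated from a CSV in the repository); the paths are hypothetical. It reports chains longer than one hop and loops so the build can stop before users or crawlers hit them.

```python
from typing import Dict, List


def check_redirect_map(redirects: Dict[str, str], max_hops: int = 1) -> List[str]:
    """Report redirect loops and chains longer than max_hops in a source -> target map."""
    problems = []
    for source in redirects:
        path = [source]
        current = redirects[source]
        while current in redirects:
            if current in path:
                problems.append("loop: " + " -> ".join(path + [current]))
                break
            path.append(current)
            current = redirects[current]
        else:
            # The while loop ended normally: `current` is a final, non-redirecting URL.
            if len(path) > max_hops:
                problems.append(f"chain of {len(path)} hops: " + " -> ".join(path + [current]))
    return problems


# Hypothetical redirect map; /old-shoes should point straight at /shoes.
redirect_map = {"/old-shoes": "/footwear", "/footwear": "/shoes", "/sale-2023": "/sale"}
for issue in check_redirect_map(redirect_map):
    print(issue)
```

In a pipeline, the job would exit with a non-zero status whenever the returned list is non-empty, so the deployment stops until the chain is flattened.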

How do teams work together? We follow a documented change-control process for SEO updates and use feature flags to test changes safely. The technical team fixes issues first, then content optimisation and product testing follow.

What makes good reporting? Reports combine site audits with trend lines and an action list for developers. This keeps everyone informed and focused on impactful fixes.

Conclusion

Technical SEO is key to good search engine optimisation. Without solid crawlability, indexation, speed, security, and structured data, content and link-building won’t work well. Fixing these issues first gives the best results.

Begin with a detailed technical audit to find and fix crawl and indexation problems. Make sure your site uses HTTPS, improves mobile speed, and includes the right structured data. Use tools like Google Search Console and PageSpeed Insights to check your work.

Keep an eye on your site’s performance with regular checks and clear metrics so old problems don’t return. For help with technical SEO or setting up your site, email hello@defyn.com.au.